Is Minority Report becoming a reality? The technology now being used to predict crime may prove just as problematic as the premise of the science fiction film and TV series, in which psychics known as “precogs” foresee crimes so that police can arrest would-be offenders before they act. In the new world of crime fighting, racism meets technology with potentially disastrous results: a high-tech white supremacy that amplifies discrimination against Black people.

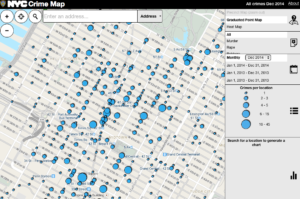

Jack Smith IV at Mic reported that tech companies are promoting a form of technology called crime mapping. Working from the presumption that the numbers don’t lie, this multimillion-dollar industry claims to offer fair, efficient, data-driven alternatives to old-school, “clunky” and potentially discriminatory policing methods.

“Today’s crime-prediction tech doesn’t identify suspects, like in Hollywood’s fictional pre-crime units,” Smith writes. “Instead, traditional digital policing uses maps that are historical, then layers it with on-the-ground knowledge to provide custom insights about how to change the behavior of police on their beats.”

Crime-prediction mapping is now used by police departments and precincts across the country, including in major cities such as New York, Los Angeles, Philadelphia and Miami. Critics argue that police already know where crime occurs and that this technology amounts to “old wine in new bottles,” merely telling officers what they already know. As a result, they say, departments are spending millions of dollars on crime-mapping systems that provide no new intelligence.

“Trying to predict future crimes based on hotspots just tends to show areas of high crime,” said Deputy Chief Jonathan Lewin, chief technology officer of the Chicago Police Department, in an interview with Mic.

Critics also warn that the new technology could crowd out old-school community policing. It is important for police to know and interact with the communities they serve, and that practice could come to a stop if departments rely too heavily on technology. Worse, some are concerned that the technology will amplify racial inequality. If the metrics rely on data generated by traditional, racially discriminatory policing methods, such as focusing drug enforcement on Black communities even though white people use drugs at a higher rate, the result will be a racist system.

“It gives the police a way out: ‘Oh, it’s not my cops that are profiling, we’re going based on the data,’” said Christopher Herrmann, a professor at the John Jay College of Criminal Justice and a former crime analysis supervisor for the NYPD. “Because it’s data-driven, it can exonerate the police. But the software is going to point them to the same places they’re already policing.”

“The data itself doesn’t remove the bias, it only exacerbates it, and reproduces the inequality that gave you the data in the first place,” said Malkia Cyril, executive director of the Center for Media Justice. “People keep turning to technology like it’s a savior. But you can’t insert technology into inequality and expect it not to produce inequality.”

Given that the tech sector is woefully lacking in diversity, it stands to reason that a lack of sensitivity to and awareness of race may be reflected in its products and services. As Mic has reported, some infrared sensors on soap dispensers do not work with dark skin, Google’s software has labeled Black people as “gorilla” or “ape,” and the Xbox Kinect gaming system did not recognize the faces of dark-skinned gamers.

These examples reflect a pattern of technology built for white users only, rather than developed with an eye toward the diversity of its consumer base.