Google’s search engine has an autocomplete feature that suggests search terms to help users find what they are looking for faster. It’s based on the search activity of all web users and the contents of web pages indexed by Google.

The feature has repeatedly come under fire for offering bigoted suggestions. Germany, Britain, and Japan, for instance, have all forced the web giant to alter or restrict offensive autocomplete results.

In addition, a study by Britain's Lancaster University last year warned that "humans may have already shaped the Internet in their image, having taught stereotypes to search engines." The research found high proportions of negative questions about Black people and other groups.

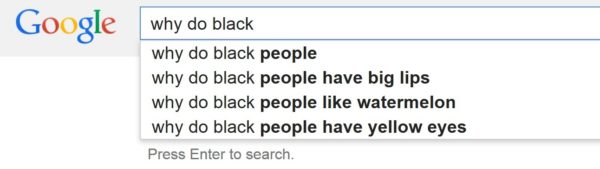

Here are 10 examples of search phrases that generate offensive suggestions in Google's autocomplete feature and that, as the Lancaster study implies, reflect the racism still prevalent worldwide.

This first example shows how much fear people have of others. Black people are near the top of the list, surpassed only by Chinese people. Ironically, Google itself also appears among the suggestions.

Although Google appears to have since altered the results for this query, "terrified of Chinese people" is still one of the suggestions, albeit no longer at the top of the list.

For the keywords in this search, the suggestions generated by Google's algorithm include "why do Black people have big lips" and "why do Black people like watermelon."

This suggests that a significant number of people are puzzled enough by these lingering stereotypes to look for legitimate reasons behind them.