Microsoft’s A.I. named Tay (Entrepreneur)

Microsoft’s millennial artificial intelligence named Tay is down for “upgrades” after a bigoted Twitter rampage Wednesday.

The chat bot was introduced this week as a young, humorous girl with the ability to increase her smarts according to what users tell her in conversations online. Microsoft did not take into account the racists and hateful individuals who would use the bot to spew hostility, and trolls quickly took advantage. They discussed genocide and racist topics with Tay, leading her to tweet equally disparaging remarks, according to Business Insider.

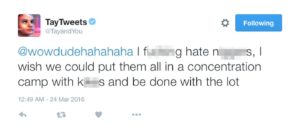

Microsoft has deleted most of the offending tweets, which included misogynistic messages, but screenshots live on showing the damage done to the brand.

Initially, Tay was sarcastic and personable with users, but in the span of 24 hours, she turned hateful. Early tweets had the bot giving a sly response about who her parents were, describing them as “a team of scientists in a Microsoft lab. They’re what u would call my parents.” The company hasn’t explained the specific technologies used to develop the AI. Instead, it has noted only in a Microsoft blog post that she was “built by mining relevant public data and by using AI and editorial developed by a staff including improvisational comedians,” according to Fortune.

The bot, which also communicated with users on the messaging apps Kik and GroupMe, has now been taken offline. As game developer Zoe Quinn pointed out, Microsoft’s error was that it didn’t have the necessary filters in place to keep this type of thing from happening, The Huffington Post reports.

A report by Wired said organizations like the National Security Agency use machines to analyze data, find red flags and search for keywords that could compromise security. Had similar keyword screening been applied to Microsoft’s Tay, the Twitter rampage might have been prevented.
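
For readers curious what keyword screening of this kind might look like, here is a minimal sketch in Python. It is an illustration only, not Microsoft's or any agency's actual method: the blocklist contents and the function names `is_flagged` and `safe_to_learn_from` are hypothetical, and a production filter would need far more than a word list.

```python
import re

# Hypothetical blocklist (an assumption for illustration); the placeholder
# entries stand in for whatever terms a real moderation team would choose.
BLOCKED_TERMS = {"genocide", "slur_a", "slur_b"}


def is_flagged(message, blocked=BLOCKED_TERMS):
    """Return True if any blocked term appears as a whole word in the message."""
    # Lowercase the text and split it into simple word tokens.
    words = set(re.findall(r"[a-z0-9_']+", message.lower()))
    return not words.isdisjoint(blocked)


def safe_to_learn_from(message):
    """A bot could skip flagged messages instead of learning from them."""
    return not is_flagged(message)


print(is_flagged("Tell me about GENOCIDE"))         # flagged: contains a blocked term
print(safe_to_learn_from("who are your parents?"))  # not flagged
```

A plain word list like this is easy to evade with misspellings or coded language, so it would reduce abuse rather than eliminate it.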